Machines That Think Like Us: Insights from the NeuroAI Workshop in Florence

How artificial and biological intelligence are converging on shared principles

On July 9th 2025, Z. Sage Chen (NYU) and I organized "The BRAIN 2.0 NeuroAI" workshop at the Organization for Computational Neurosciences (OCNS) annual meeting in Florence. The workshop brought together several scientists working at the intersection between Natural and Artificial Intelligence research. The energy was high and one could feel the enthusiasm in the air: for the first time in human history, we have machines that appear to learn, remember, and make decisions in ways that mirror core aspects of human cognition. This creates an unprecedented opportunity: we can now understand our minds by building artificial systems that think and behave like us.

The workshop conversations were bi-directional. In one direction, people asked: what can the strategies and mechanisms of artificial networks tell us about how we function? In another, we collectively asked: can we leverage what we are constantly learning about neuroscience to build better AI? After all, the energy efficiency and flexibility of animal brains is unmatched by state-of-the-art artificial agents.

This represents a remarkable shift from traditional approaches. Instead of studying brains and machines in isolation, we're using them to inform each other. The artificial systems we create serve as hypotheses about how intelligence works, hypotheses we can test, modify, and refine in ways that would be impossible with biological systems alone. Throughout the workshop, a fascinating tension emerged: the most accurate models of neural activity may not be the most interpretable or biologically meaningful ones—a fundamental tradeoff that shapes how we understand minds.

Sage (left) and I (right)

The Mystery of How the Brain Learns

The development and application of backpropagation to deep networks created watershed moments that contributed to the modern AI era. While backpropagation itself was developed earlier, Geoff Hinton's 2006 work on deep belief networks and especially the 2012 AlexNet breakthrough that won the ImageNet competition marked the real turning points. The backpropagation algorithm allows neural networks to learn by computing how errors in behavioral outputs should change weights throughout the network and has shown remarkable learning efficiency. It works by propagating error signals backward through the network, telling each connection exactly how to adjust to reduce mistakes.

Since then, backpropagation has become the backbone of modern deep learning, powering everything from image recognition to language models. But here's the puzzle: this remarkable learning efficiency is likely mirrored by the brain, yet we don't know what the analogous biological algorithm is. The brain cannot implement standard backpropagation, as it lacks the requisite backward connectivity and global, vectorized error signals that artificial networks rely on.

Traditional Computational Neuroscience has long proposed Hebbian learning ("cells that fire together, wire together") as the brain's learning mechanism. While Hebbian learning is biologically plausible and occurs throughout the nervous system, its classical formulations lack the credit assignment specificity of backpropagation. Hebbian learning can strengthen connections between simultaneously active neurons, but it struggles to determine which specific connections are responsible for errors in complex, multilayered networks. This creates a fundamental gap: the brain needs backpropagation-like credit assignment to learn complex behaviors, but it can only implement local plasticity rules.

This gap was a central theme throughout our workshop, with speakers presenting different pieces of what might be a larger puzzle. Rui Ponte Costa's work on different learning mechanisms across neural circuits presents a compelling case for partitioning the credit assignment problem across different substrates distributed throughout the brain. For example, the cortex learns via self-supervised learning but can be influenced by fast predictive subcortical machinery to adjust its representations quickly and flexibly. This parallels some of our own work on thalamocortical interactions, including our longstanding collaboration with Sage Chen's lab. Gaspard Olivier presented his PhD work with Rafal Bogacz, showcasing the lab's work on predictive learning achieving backpropagation-like performance (under certain conditions) using purely local mechanisms. Nao Uchida demonstrated how distributional reinforcement learning (where the brain represents entire probability distributions of future rewards rather than simple averages) could provide another piece of the credit assignment puzzle. The emerging picture suggests that the brain's backpropagation parallel is likely a combination of these mechanisms working together, rather than any single biological algorithm.

From Lillicrap et al., 2020 Nat Rev Neurosci (Backpropagation and the brain)

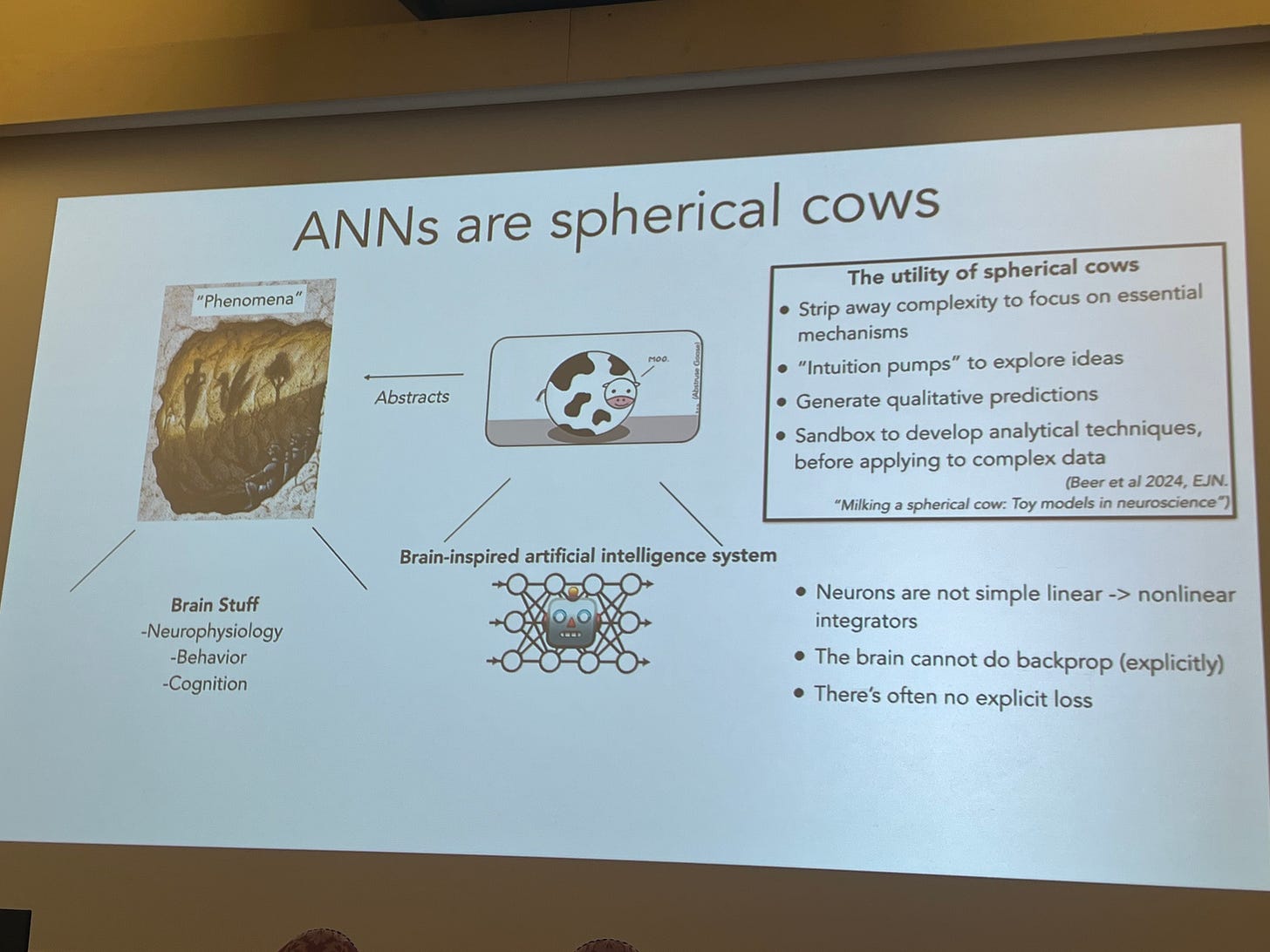

Rui Costa: Three Pillars of Brain-Like Intelligence

Rui presented "three pillars" of intelligent systems:

1. World Models (Unsupervised Learning): The neocortex builds internal models of the world through self-supervised learning. Recent work suggests that local cortical circuits may have evolved specifically to support this kind of learning, with Layer 2/3 neurons predicting future inputs due to processing delays, while Layer 5 neurons integrate predictions from both the thalamus and cortical predictions.

2. Model Fine-tuning (Reinforcement Learning): Dopamine adjusts prefrontal cortex activity, thereby fine-tuning the world model that guides learning throughout the brain. This goes beyond classical RL formulations; it's a sophisticated meta-learning system.

3. Flexible Behavior: The cerebellum and hippocampus work together as predictive systems, with the cerebellum providing high-dimensional, fast predictions while the hippocampus offers more compressed, memory-based guidance. Remarkably, this work shows that combining a cerebellum-inspired system with a fixed RNN performs better on zero-shot learning tasks than purely plastic networks. This connects directly to our work: we have built a series of models (Aditya Gilra 2018, Ali Hummos 2022, Wei-Long Zheng 2024) that all rely on a similar mechanism of fixed PFC RNN and a fast subcortical modulator to enable flexibility (and maybe generalization). Importantly, the cerebellum communicates with prefrontal cortex through the mediodorsal thalamus, creating a pathway for rapid, predictive learning that doesn't require extensive retraining of cortical circuits.

What makes Costa's framework interesting is its grounding in optimization theory. Rather than describing these systems phenomenologically, he's showing how they might emerge from the brain's need to solve specific computational problems efficiently. His lab has demonstrated that cortical circuits can approximate deep learning algorithms, that the cerebellum enables rapid adaptation through "feedback decoupling," and that cholinergic modulation implements a kind of attention mechanism for learning.

Rui Ponte Costa (Oxford)

Tatiana Engel: Digital Twins and Latent Circuit Models

Tatiana Engel, from Princeton's Neuroscience Institute, presented groundbreaking work that spans two critical areas: the challenges of neural "digital twins" and her innovative latent circuit model approach.

Her work on "digital twins" (RNNs trained to reproduce neural population dynamics) revealed a fundamental limitation in how we think about brain models. When Engel's team trained RNNs to match neural activity patterns, they found that these "twin" networks could reproduce the data beautifully. But when they tried to use these twins to predict the effects of neural perturbations, the results were not awesome, to put it mildly. Different twin networks that matched the same data equally well made completely different predictions about how the brain would respond to interventions.

This failure isn't just a technical problem. It reveals something deep about the nature of biological intelligence. The brain operates in a low-dimensional space of meaningful solutions, while artificial networks explore the full high-dimensional space of possible solutions. Even when they converge on the same behavior, they're often implementing completely different computational strategies.

Engel's solution is elegant: instead of training twins to match all neural activity, train them to capture the essential low-dimensional structure that actually matters for computation. This "latent circuit" approach trades some descriptive accuracy for genuine predictive power. Her latent circuit model is a dimensionality reduction approach in which task variables interact via low-dimensional recurrent connectivity to produce behavioral output. Unlike traditional correlation-based dimensionality reduction methods, the latent circuit model incorporates recurrent interactions among task variables to implement the computations necessary to solve the task.

Crucially, Engel demonstrated that when you constrain RNNs to have fewer neurons, forcing them into lower-dimensional regimes, something remarkable happens: they begin to show more structured, interpretable behavior. However, this improvement in interpretability and biological plausibility comes with a tradeoff—there's a reduction in their ability to perfectly match the complex, high-dimensional neural activity patterns. This finding highlights a fundamental tension in computational neuroscience: the most accurate models of neural activity may not be the most interpretable or biologically meaningful ones.

When applied to recurrent neural networks trained on context-dependent decision-making tasks, her latent circuit model revealed a suppression mechanism in which contextual representations inhibit irrelevant sensory responses. Most remarkably, when she applied the same method to prefrontal cortex recordings from monkeys performing the same task, they found similar suppression of irrelevant sensory responses—contrasting sharply with previous analyses using correlation-based methods that had found no such suppression.

The key insight is that dimensionality reduction methods that do not incorporate causal interactions among task variables are biased toward uncovering behaviorally irrelevant representations. Engel's work demonstrates that incorporating the recurrent interactions that implement task computations is essential for identifying the neural mechanisms that actually drive behavior.

Tatiana Engel (Princeton)

Miller's Hybrid Models: Bridging AI and Classical Cognition

Kevin Miller from DeepMind's Neuroscience Lab presented work on hybrid neural-cognitive models that bridge classical cognitive frameworks with modern machine learning. Miller's approach combines the interpretability of traditional cognitive models with the predictive power of neural networks, creating systems that can both explain and predict behavior.

His work addresses a fundamental challenge in computational cognitive science: classical cognitive models are interpretable but often limited in their predictive accuracy, while neural networks can achieve high performance but remain black boxes. Miller's hybrid RNNs and disentangled architectures attempt to get the best of both worlds, maintaining the transparency needed for scientific understanding while achieving the performance necessary for practical applications.

The implications extend beyond just better models. As Miller noted, there may be an inherent tension between complexity and interpretability that reflects something fundamental about how we communicate and reason about intelligent systems. This connects to broader questions about whether the most accurate models of cognition are necessarily the ones we can understand and explain to others.

Kevin Miller (Google Deep Mind)

Sen Song: Hierarchical Reasoning Models

Sen Song presented compelling work on the Sapient project's Hierarchical Reasoning Model (HRM), a novel recurrent architecture that challenges conventional approaches to AI reasoning. What makes this work particularly compelling is its demonstration that recurrent transformers can achieve sophisticated reasoning capabilities that current large language models struggle with.

The HRM operates through two interdependent recurrent modules: a high-level module responsible for slow, abstract planning, and a low-level module handling rapid, detailed computations. This architecture is directly inspired by hierarchical processing in the brain, where different cortical areas operate at distinct timescales (slow theta waves for high-level planning and fast gamma waves for detailed processing).

What's remarkable is the efficiency: with only about 1000 training examples, the HRM (~27M parameters) outperforms much larger Chain-of-Thought models on challenging benchmarks like the Abstraction and Reasoning Corpus (ARC), Sudoku-Extreme, and complex maze navigation tasks. The model solves these tasks directly from inputs without requiring explicit chain-of-thought supervision.

This work suggests something profound about the future of AI architecture. By introducing recurrence back into transformers, we might finally achieve what's been missing in current LLMs: spontaneous activity and genuine thought-like processes. As Song noted, the recurrent dynamics could enable the kind of internal mental simulation that characterizes real reasoning, rather than just sophisticated pattern matching.

Sen Song (Tsighua Univerity) presenting Sapient

Panel Discussion 1 (left to right: Song, Engel, Ponte Costa, Miller)

Dan Levenstein: NeuroAI as Theory Development

Dan Levenstein's work may be among the clearest examples of how AI modeling can generate new theories about core brain functions like memory and planning. Dan presented his work with Blake Richards and Adrien Peyrache, which represents concrete progress in understanding how biological neural networks might actually implement sophisticated learning algorithms. Most significant is Dan's recent bioRxiv paper with Peyrache and Richards on "Sequential predictive learning is a unifying theory for hippocampal representation and replay". This work addresses one of the most fundamental questions in neuroscience: how does the hippocampus both form cognitive maps and generate the offline "replay" sequences that support memory consolidation and planning?

The breakthrough comes from training recurrent neural networks to predict egocentric sensory inputs as an agent moves through simulated environments. Levenstein and colleagues found that spatially tuned cells emerge from all forms of predictive learning, but offline replay only emerges when networks use recurrent connections and head-direction information to predict multi-step observation sequences. This promotes the formation of a continuous attractor that reflects the geometry of the environment (essentially a neural cognitive map).

What's remarkable is that these offline trajectories showed wake-like statistics, autonomously replayed recently experienced locations, and could be directed by a virtual head direction signal. Networks trained to make cyclical predictions of future observation sequences were able to rapidly learn cognitive maps and produced sweeping representations of future positions reminiscent of hippocampal theta sweeps.

This work suggests that hippocampal theta sequences reflect a circuit that implements a data-efficient algorithm for sequential predictive learning. The framework provides a unifying theory that connects spatial representation, memory replay, and theta sequences under a single computational principle: the brain's drive to predict future sensory experiences.

What makes this work particularly significant is that it doesn't require exotic new mechanisms. It leverages well-known properties of dendrites, synapses, and synaptic plasticity that already exist in cortical circuits. The burst-dependent plasticity rule essentially allows the brain to implement a form of top-down credit assignment that rivals artificial backpropagation algorithms, but using purely local, biologically plausible mechanisms.

This represents exactly the kind of theory development that NeuroAI enables: using optimization principles and machine learning insights to understand how evolution might have solved fundamental computational problems in neural circuits.

Couldn’t find a picture of Dan— but this slide easily wins the coolest slide award of the workshop (Dan is starting his lab at Yale in August!)

Gaspard Olivier: Predictive Learning Frameworks

Gaspard Olivier presented work from Rafal Bogacz's recent Nature Neuroscience paper "Inferring neural activity before plasticity as a foundation for learning beyond backpropagation." Olivier's approach centers on the concept of energy machines (physical mechanical analogies that provide an intuitive understanding of how energy-based networks achieve sophisticated learning).

The key insight from Bogacz's work, which Olivier built upon, is the principle of "prospective configuration." Unlike backpropagation, which modifies weights first and then observes the resulting change in neural activity, prospective configuration works in reverse: neural activity changes first to match the desired output, and then synaptic weights are modified to consolidate this prospective activity pattern.

Olivier's energy machine framework visualizes this process elegantly. In these mechanical systems, neural activity corresponds to the vertical position of nodes sliding on posts, synaptic connections correspond to rods connecting the nodes, and the energy function corresponds to the elastic potential energy of springs. When the system "relaxes" by minimizing energy, it naturally settles into the prospective configuration (the neural activity pattern that the network should produce after learning).

This framework solves a fundamental problem in biological learning: how to implement credit assignment without the precise backward information flow that backpropagation requires. As Olivier demonstrated, the relaxation process in energy-based networks inherently "foresees" the effects of potential weight changes and compensates for them dynamically, avoiding the catastrophic interference that plagues backpropagation.

The practical implications are profound. Olivier's work shows that energy-based learning can outperform backpropagation in biologically relevant scenarios like online learning, continual learning across multiple tasks, and learning with limited data (precisely the challenges that biological systems face). The energy machine framework provides both the theoretical foundation and the intuitive understanding for why evolution might have favored such learning mechanisms over more direct optimization approaches.

This represents a crucial piece of the credit assignment puzzle, demonstrating how the brain might implement sophisticated learning algorithms through local, energy-based computations that are both biologically plausible and computationally superior to artificial alternatives.

Couldn’t find a picture of Gaspard— so this is the cool figure from the paper and the framework he discussed

Nao Uchida: Distributional Reinforcement Learning

Nao Uchida's presentation began with a fascinating origin story about how DeepMind reached out to him following the groundbreaking success of Deep Q-Networks (DQN). The 2015 Nature paper "Human-level control through deep reinforcement learning" had demonstrated that artificial agents could learn to play Atari games directly from pixel inputs, achieving superhuman performance across dozens of games. This breakthrough sparked intense interest in understanding whether similar computational principles might be operating in biological brains.

DeepMind's collaboration with Uchida led to the landmark 2020 Nature paper "A distributional code for value in dopamine-based reinforcement learning" by Dabney, Kurth-Nelson, Uchida, and colleagues. This work revolutionized our understanding of how the brain represents value and reward. Rather than encoding just the mean expected reward (as traditional reinforcement learning theory suggested), Uchida's team discovered that dopamine neurons encode entire probability distributions of future rewards.

The key insight was that different dopamine neurons have different "expectile codes": some neurons are optimistic (responding more to positive prediction errors), others are pessimistic (responding more to negative prediction errors), and still others fall somewhere in between. This diversity in dopamine neuron responses, which had long puzzled neuroscientists, suddenly made computational sense: the brain wasn't just learning average rewards, but was representing the full uncertainty and variability of future outcomes.

This distributional approach explains why dopamine neurons show such heterogeneous responses to the same stimuli. Rather than being noise or biological messiness, this diversity serves a crucial computational function. It allows the brain to represent not just "how much reward am I likely to get?" but "what's the full range of possible rewards, and how likely is each outcome?"

Uchida then pivoted to discuss his most recent Nature paper with Alexandre Pouget: "Multi-timescale reinforcement learning in the brain." This work revealed another fundamental aspect of dopamine diversity: different dopamine neurons operate with different discount factors from temporal difference (TD) learning algorithms. Some neurons focus on immediate rewards (high discount factors), while others consider longer-term consequences (low discount factors).

This discovery provides a neurobiological foundation for why humans and animals can balance immediate gratification with long-term planning. Instead of having a single, universal discount factor, the brain maintains a population of neurons with different temporal horizons, allowing for more flexible and adaptive decision-making.

What makes Uchida's work particularly compelling in the NeuroAI context is that it represents systems neuroscience at its most computationally rigorous. Rather than simply describing what neurons do, his approach tests specific algorithmic hypotheses about how neural circuits implement learning and decision-making. This is precisely what systems neuroscience in the AI age should be: using computational theories to generate testable predictions about neural mechanisms, then using the results to refine both our understanding of the brain and our artificial intelligence algorithms.

The broader implications are profound: if the brain implements distributional reinforcement learning with multiple timescales, this suggests that current AI systems (which typically use single discount factors and mean-based value representations) are missing crucial computational advantages that biological systems have evolved. Understanding these biological algorithms could lead to more robust, adaptive, and efficient artificial intelligence systems.

Nao Uchida (Harvard)

Looking Forward: Intelligence as Optimization

What made Florence special wasn't just the individual insights, but their convergence around a central theme: machines are becoming our best models for understanding minds not because they copy neural architecture, but because they solve the same optimization problems that evolution has been working on for millions of years.

Perhaps the most profound insight was recognizing a fundamental trade-off that shapes both artificial intelligence and human cognition: the tension between predictive power and interpretability. Recent work has shown that tiny recurrent neural networks, sometimes with just 1-4 units, can outperform much larger networks at predicting animal behavior. Yet the models that best predict behavior are often the hardest to understand, while the models we can understand often predict behavior poorly.

This "interpretability paradox" might be fundamental to how minds work. When you explain your decision-making process to a friend, you're not giving them access to your neural network weights. You're constructing a simplified, interpretable model that captures the essential logic while losing the messy details. Evolution may have equipped us with simple, communicable heuristics precisely because they're interpretable, even though more complex processes actually drive our behavior.

Whether it's Uchida's work revealing how dopamine neurons encode probability distributions of rewards, Dan's demonstrations that predictive learning can unify spatial representation and replay, or Costa's three-pillar architecture showing how world models emerge from optimization principles, the common thread is that intelligence arises from solving computational problems efficiently under biological constraints.

This represents a profound shift in how we think about the relationship between brains and machines. We're not trying to build artificial brains. We're trying to understand the computational principles that both biological and artificial systems must discover to be intelligent. Instead of asking "How does the brain work?" researchers are asking "What computational problems does intelligence solve, and what are the optimal solutions?"

The implications extend beyond academic understanding. If biological systems have evolved superior learning algorithms like distributional reinforcement learning, prospective configuration, or hierarchical reasoning models, then incorporating these insights could lead to more sample-efficient, robust, and adaptable artificial intelligence systems.

As the workshop concluded, the participants seemed to recognize they were grappling with fundamental questions about intelligence that will likely shape the next decade of research. The conversation has only just begun, but the direction is becoming clearer: understanding intelligence requires understanding the optimization problems that both biological and artificial systems must solve.

Organizing this workshop with Sage was one of the most intellectually energizing experiences I've had. It reinforced the idea that if we want to understand intelligence—biological or artificial—we need both neurons and networks, both brains and machines.

Panel discussion 2 (Left to right: honorary panelist Ken Miller (Columbia), Kevin Miller (Deep Mind), Gaspard Oliviers (Oxford), Dan Levenstein (Montreal—>Yale), Nao Uchida (Harvard), Sen Song (Tsinghua).

The OCNS 2025 NeuroAI workshop took place July 9th, 2025 in Florence, Italy. The insights presented here represent the collective wisdom of dozens of researchers pushing the boundaries of our understanding of intelligence.

Appreciate your taking time to provide summary

The digital twin findings are particularly noteworthy. Be worth exploring further.

Thanks for sharing this info, and you might also find this interesting:

Unlike other animals, the human brain uses 67% more energy for cognition versus sensory-motor regions [1] which is powered partly by special energy-efficient mitochondria [2].

Also unlike other animals, the human brain involves parallel information transmission via white matter tracts [3], and synaptic connectivity flows via a directed acyclic graph (DAG) in layer 2-3 pyramidal neurons [4].

Single neuron recordings also find that human learning may not be context dependent unlike in rodents and monkeys [5]. How context invariant is AI such as LLMs?

[1] https://pubmed.ncbi.nlm.nih.gov/38091393/

[2] https://pubmed.ncbi.nlm.nih.gov/40140564/

[3] https://pubmed.ncbi.nlm.nih.gov/38081838/

[4] https://pubmed.ncbi.nlm.nih.gov/38635709/

[5] https://pubmed.ncbi.nlm.nih.gov/39823228/